The impacts of LLMs on people’s lives (Grok, Gemini, ChatGPT) — why we rely on them more, their upsides and downsides, and how to balance human ⇄ AI interactions

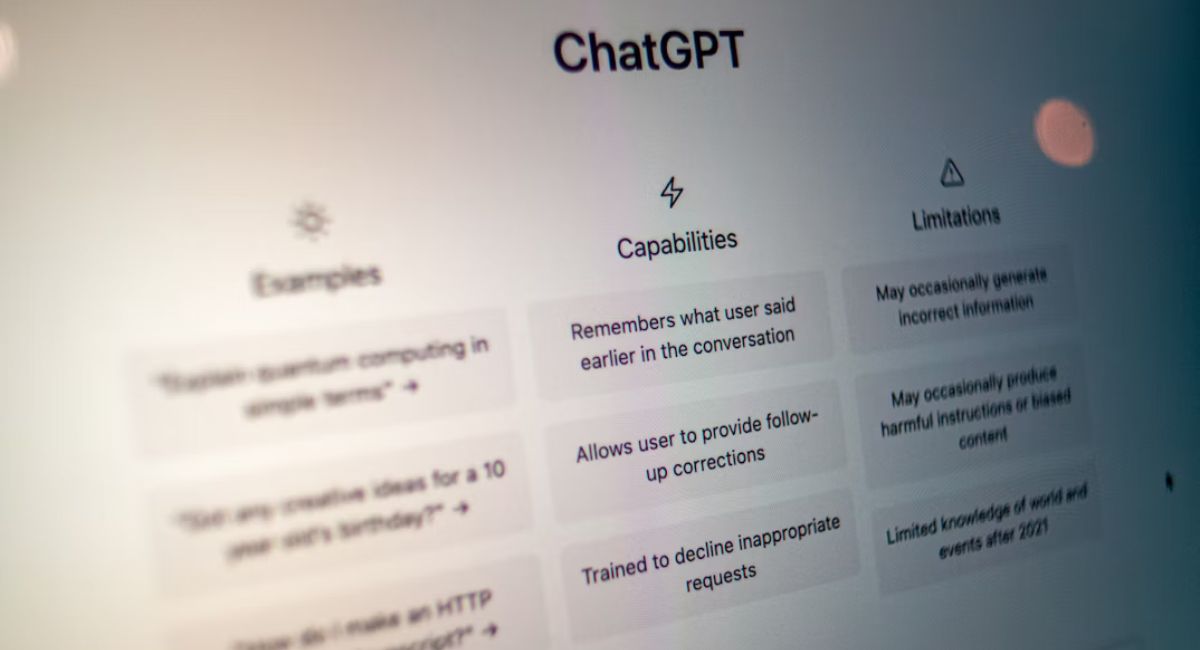

Large language models (LLMs) such as xAI’s Grok, Google’s Gemini, and OpenAI’s ChatGPT have moved from curiosity-driven demos to everyday tools. Hundreds of millions of people now use them for work, learning, creativity, therapy-like companionship, and decision support. Their influence is rapid and broad: they change what tasks look like, which skills matter, and how people relate to information and to one another. Below I summarize the major impacts (with studies and quotes), why reliance is rising, the main benefits and harms that researchers and leaders are flagging, and practical guardrails for balancing human agency with AI assistance.

Why people increasingly rely on LLMs

- Speed + accessibility of expertise. LLMs compress large amounts of text into conversational answers and step-by-step help, from drafting code to explaining medical concepts. This makes “expert-like” support instantly available to non-experts. Usage surveys show rapid uptake: for example, about 34% of U.S. adults had used ChatGPT according to a survey published in mid-2025, roughly double the share in 2023 (Pew Research Center).

- Productivity gains at scale. Studies and industry trials (GitHub Copilot, Microsoft research) report measurable gains in developer productivity and satisfaction when generative AI assists with routine work. McKinsey and other consultancies estimate large productivity potential across industries, worth trillions of dollars in economic value. That promise pushes companies and professionals to adopt LLM tools quickly (The GitHub Blog).

- Better performance on specialized tasks. LLMs have shown surprising competence on knowledge-intensive benchmarks. For example, peer-reviewed studies found GPT-series models performing at or near passing thresholds on the U.S. Medical Licensing Examination (USMLE). Results like these increase trust for some use cases, though with important caveats (PMC).

- Product integrations and platform shifts. Big tech leaders argue we are in an “AI platform shift” in which AI is embedded in search, office tools, and apps. That shift further normalizes LLM use, and Sundar Pichai and others have framed it as the next platform moment (blog.google).

The upsides (what LLMs are doing well)

- Democratizing knowledge: People without specialist training can get clear explanations, code snippets, lesson plans, business outlines, and summaries. This lowers the friction of learning and experimentation, and Pew and other adoption data reflect growing public access (Pew Research Center).

- Boosting productivity: Routine writing, drafting, debugging, and research work gets faster. Companies report time savings, and employees report being able to focus more on “satisfying” tasks (The GitHub Blog).

- New creative workflows: Artists, writers, designers, and marketers use LLMs as collaborators (ideation partner, first-draft generator, or code prototyper), speeding up iteration cycles (McKinsey & Company).

- Aid in education & training: LLMs can provide individualized explanations and practice problems, acting as tutors that scale. Research on model performance on standardized professional exams suggests potential as training aids, with supervision (PMC).

- Operational automation for businesses: Content generation, customer-support triage, and knowledge retrieval become cheaper and faster, enabling smaller teams to do more. McKinsey quantifies a large economic upside if adoption is responsible (McKinsey & Company).

The downsides and serious risks

- Hallucinations and misinformation. LLMs can produce plausible but false statements, a persistent technical limitation. Without critical verification, users may act on incorrect or harmful advice. Stanford’s AI Index and other analyses emphasize uneven standards and the lack of common evaluations for responsible model behavior (Stanford HAI).

- Overtrust and deskilling. If people accept model outputs uncritically, they can lose practice at verification, critical thinking, and domain expertise. Psychologists and sociologists warn against substituting machine answers for real human interaction and judgment. Sherry Turkle’s work on digital intimacy and on how technology reshapes relationships is often invoked as a caution that simulated companionship is not the same as human connection (Goodreads).

- Safety and high-stakes errors. In domains like healthcare, finance, and legal advice, hallucinations can cause material harm. A model that passes exams under controlled conditions is not necessarily ready for unsupervised real-world decision-making. Medical studies show capability but also signal the need for oversight (PMC).

- Privacy and data-use concerns. Users often supply sensitive information, and how that data is stored, used, or re-ingested into models raises both policy and personal privacy issues. Regulatory frameworks are still catching up. Pew and other policy surveys show a public desire for more control and regulation (Pew Research Center).

- Mental-health and social effects. Health providers and institutions have warned against using chatbots as a substitute for professional therapy. There have also been troubling incidents involving chatbots and vulnerable people, which have prompted ongoing legal and ethical debates. Leaders at institutions such as the NHS have cautioned against unregulated use for therapy (The Times).

- Concentration of power & misalignment concerns. Several tech leaders and AI researchers have flagged existential or systemic risks if governance and incentives are not aligned. Elon Musk, Geoffrey Hinton, and other prominent figures have called for caution or regulation. At the same time, CEOs like Sundar Pichai and Sam Altman publicly emphasize both the benefits and the need for responsibility (Fortune; blog.google).

What leading figures and institutions are saying (short quotes & summaries)

- Sundar Pichai (Google): framed current developments as an “AI platform shift” and urged bold but responsible adoption (blog.google).

- Sam Altman (OpenAI): has acknowledged real-world harms and the need for stronger safety measures, and OpenAI is exploring new safeguards for vulnerable users. Recent reporting describes Altman considering alerting parents or authorities in severe cases (The Guardian).

- Elon Musk and other skeptics: have repeatedly warned about risks and argued for governance and oversight to avoid catastrophic outcomes (Fortune).

- Stanford HAI / AI Index & McKinsey: provide data-driven overviews. These show rapid model proliferation and large economic opportunity, but also a lack of standardized responsibility testing and substantial workforce change ahead (Stanford HAI).

- Psychology voices (Sherry Turkle, APA reporting): point to changes in intimacy, attention, and the social function of conversation. They urge caution about mistaking simulated empathy for human care and call for boundaries in therapeutic contexts (Goodreads).

How to balance Human ⇄ AI interactions — practical guidance

Below are actionable rules for individuals, educators, product teams, and policymakers to get benefits while managing risks.

For individual users

- Treat LLM output as advice, not authority. Always verify facts, ask for sources, and cross-check with trusted domain resources for high-stakes decisions (health, legal, safety).

- Keep human judgment in the loop. Use AI to draft, explore options, or summarize — make decisions yourself or consult a qualified human professional.

- Protect privacy: avoid feeding personally identifying or sensitive data into public chat interfaces unless the platform explicitly states how that data will be handled.

- Limit emotional reliance. For companionship or therapy needs, prefer qualified humans, and use chatbots only for low-risk informational support. Psychologists warn that AI can offer illusory intimacy but cannot meet complex human emotional needs (Goodreads).
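The privacy advice in the list above can be partly automated with a crude pre-paste filter. The following is a hypothetical, deliberately minimal sketch. The `redact` function and its two regular expressions are illustrative assumptions, not part of any chatbot product. They catch only obvious email addresses and phone-number-like digit runs. They miss names, addresses, and ID numbers, and can flag false positives such as dates. The filter supplements your own judgment rather than replacing it.

```python
import re

# Deliberately crude, hypothetical patterns: they catch obvious emails and
# phone-number-like digit runs only, and may also match things like dates.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace obvious emails and phone numbers with placeholders before sharing text."""
    text = EMAIL.sub("[EMAIL]", text)  # emails first, so their digits are not read as phones
    return PHONE.sub("[PHONE]", text)


if __name__ == "__main__":
    note = "Email jane.doe@example.com or call +1 (555) 123-4567 about my test results."
    print(redact(note))
    # prints: Email [EMAIL] or call [PHONE] about my test results.
```

Pattern-based redaction only reduces accidental leaks. For health, legal, or financial details, the safer habit is still to leave them out of public chat interfaces entirely.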

For teams and businesses

- Adopt a human-in-the-loop workflow for decisions with consequences. Deploying model outputs into production automatically, without checks, is unsafe. Require human sign-off and keep logs.

- Test for hallucinations and bias systematically. Stanford’s AI Index highlights the absence of standardized responsibility testing, so teams should run domain-specific benchmarks and adversarial tests (Stanford HAI).

- Provide transparency & provenance. Tag AI-generated content, log prompts and model versions, and offer citations or links where possible.

- Train users and measure outcomes. Microsoft/GitHub research shows that it takes weeks for teams and individuals to adopt AI tools and fully benefit from them. Invest in enablement and measurement (GitHub Resources).
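The sign-off, logging, and provenance practices in the team guidance above can be sketched as a small review gate. This is a minimal illustration under stated assumptions, not a reference design. The `AIDraft` record, the `ReviewGate` class, their field names, and the model-version string are all hypothetical. A real system would also persist the audit log rather than keep it in memory.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class AIDraft:
    """Provenance record for one model output (hypothetical fields, not a standard schema)."""
    prompt: str
    output: str
    model_version: str            # whatever identifier your provider reports
    ai_generated: bool = True     # tag so downstream readers know the content's origin
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )
    approved_by: Optional[str] = None  # set only when a named human signs off


class ReviewGate:
    """Human-in-the-loop gate: a draft cannot be published without a reviewer's sign-off."""

    def __init__(self) -> None:
        # In-memory, append-only JSON lines; a real system would persist these.
        self.audit_log: List[str] = []

    def _log(self, event: str, draft: AIDraft) -> None:
        self.audit_log.append(json.dumps({"event": event, **asdict(draft)}))

    def submit(self, draft: AIDraft) -> None:
        self._log("submitted", draft)

    def approve(self, draft: AIDraft, reviewer: str) -> None:
        draft.approved_by = reviewer
        self._log("approved", draft)

    def publish(self, draft: AIDraft) -> str:
        if draft.approved_by is None:
            self._log("blocked", draft)
            raise PermissionError("no human sign-off; refusing to publish AI draft")
        self._log("published", draft)
        # Label the published text as AI-assisted and name the accountable reviewer.
        return f"{draft.output}\n\n[AI-assisted; reviewed by {draft.approved_by}]"


if __name__ == "__main__":
    gate = ReviewGate()
    draft = AIDraft(
        prompt="Summarize this week's support tickets",
        output="Most tickets concern password resets.",
        model_version="example-model-2025-01",  # hypothetical version string
    )
    gate.submit(draft)
    try:
        gate.publish(draft)
    except PermissionError as err:
        print("Blocked:", err)
    gate.approve(draft, reviewer="support-lead")
    print(gate.publish(draft))
```

The gate raises an error instead of silently skipping an unapproved draft, so the "no sign-off, no publish" rule is hard to bypass by accident. Logging the blocked attempt also leaves a trace for later review.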

For educators and policymakers

- Teach verification skills and prompt literacy. As LLMs become ubiquitous, curricula must teach how to interrogate model outputs, check sources, and reason about uncertainty.

- Regulate where harm is probable. Health, child safety, and high-risk decision contexts should have stricter standards for AI use, and public sentiment supports more control and better regulation (Pew Research Center).

- Support safety research and independent audits. Fund third-party evaluations, red-team exercises, and open benchmarks so the public can compare models on safety, truthfulness, and fairness. Stanford and other institutions emphasize the need for shared evaluations (Stanford HAI).

A short checklist for responsible everyday use

- Who will be harmed if this output is wrong? (If anyone: verify.)

- Did the model cite verifiable sources? (If not: cross-check.)

- Is this a private/sensitive case? (If yes: don’t paste sensitive data.)

- Is this replacing a human relationship or professional? (If yes: rethink boundaries.)

- Keep a copy of prompts & outputs for traceability when results affect others.
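Teams that want to build the checklist above into an intake form or internal tool could encode it as a simple mapping from answers to required actions. This is a hypothetical sketch. The `UseCase` fields and the `required_actions` function are illustrative names, and the example scenario is invented.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class UseCase:
    """Answers to the checklist questions for one AI-assisted task (illustrative fields)."""
    could_harm_someone: bool
    model_cited_verifiable_sources: bool
    involves_sensitive_data: bool
    replaces_human_or_professional: bool
    affects_others: bool


def required_actions(case: UseCase) -> List[str]:
    """Return the follow-up action the checklist prescribes for each triggered question."""
    actions: List[str] = []
    if case.could_harm_someone:
        actions.append("verify the output")
    if not case.model_cited_verifiable_sources:
        actions.append("cross-check with trusted sources")
    if case.involves_sensitive_data:
        actions.append("do not paste sensitive data")
    if case.replaces_human_or_professional:
        actions.append("rethink boundaries")
    if case.affects_others:
        actions.append("keep prompts and outputs for traceability")
    return actions


if __name__ == "__main__":
    # Hypothetical scenario: drafting a customer email that quotes a refund policy.
    email_task = UseCase(
        could_harm_someone=True,
        model_cited_verifiable_sources=False,
        involves_sensitive_data=False,
        replaces_human_or_professional=False,
        affects_others=True,
    )
    for action in required_actions(email_task):
        print("-", action)
```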

Closing — balancing enthusiasm with humility

LLMs like Grok, Gemini, and ChatGPT bring real and measurable benefits: faster work, broader access to knowledge, and novel creative possibilities. They also carry real risks, including hallucination, privacy pitfalls, social effects, and the potential for serious harm when used without oversight. Leading companies and researchers acknowledge both sides: a platform shift is underway, but it must be governed, audited, and integrated with strong human oversight (blog.google).

The right path forward is neither technophobia nor blind acceptance. It’s disciplined adoption: amplify human capabilities where AI excels, enforce human accountability where mistakes matter, and invest in education, evaluation, and governance so society — not only a handful of companies — shapes how these powerful tools affect our lives.